The Hidden Connections: Understanding the World Through Systems Thinking

As an engineer, much of my work involves navigating software systems full of interconnected components. One of the hardest parts of this work is handling edge cases - those unexpected scenarios that surface at the boundaries of what you designed for. The tricky thing about edge cases is that fixing one often creates a new problem somewhere else. You patch a timeout issue in one service, and suddenly a downstream service starts behaving differently because it was relying on that timeout.

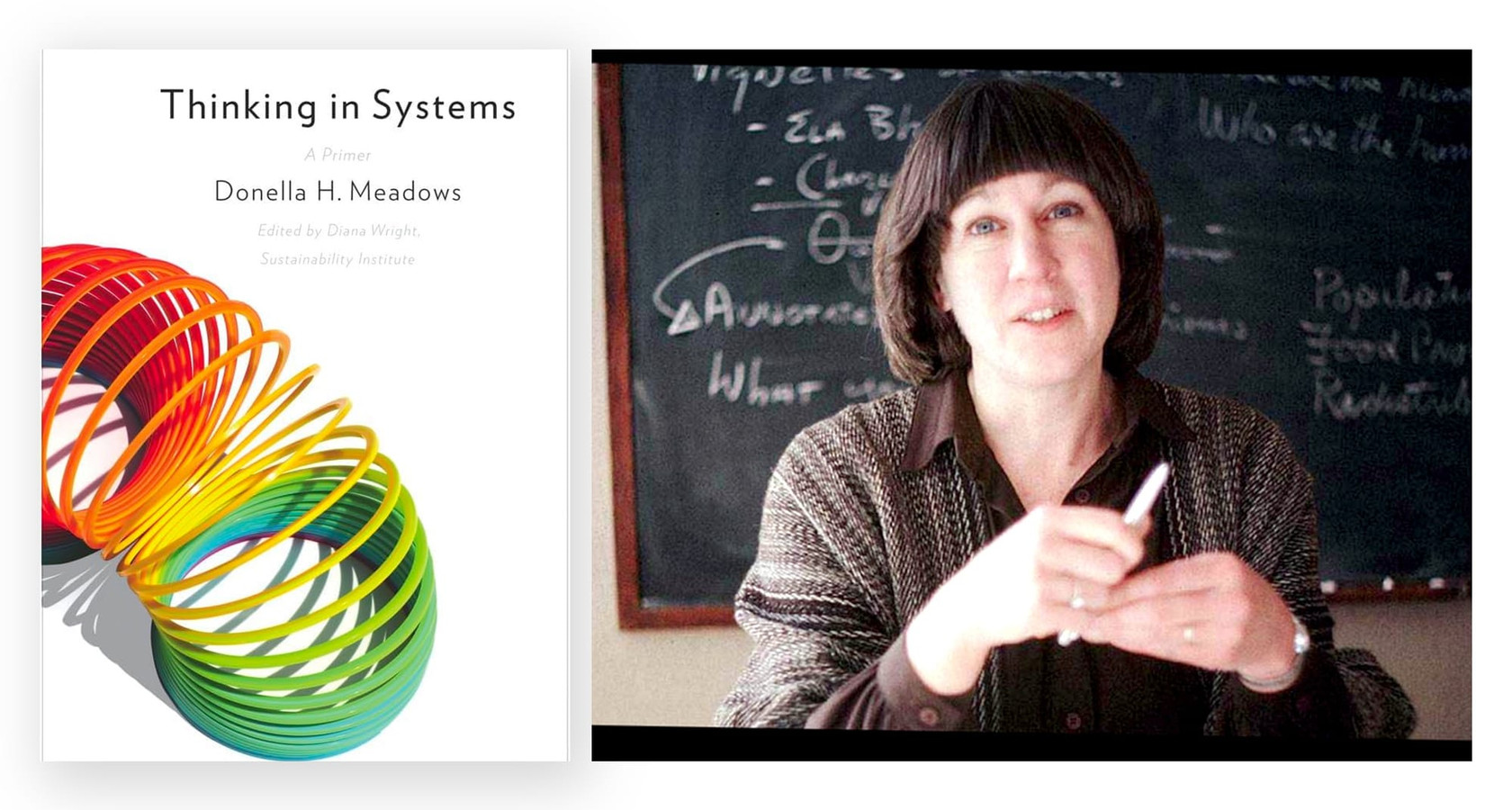

After running into this pattern enough times, I started looking for a better way to think about interconnected problems. That led me to Donella Meadows' book "Thinking in Systems: A Primer" - a short, clear introduction to systems thinking that changed how I approach complex problems, both in engineering and beyond. This post is about what I learned.

What Systems Thinking Actually Is

Most of us are trained to solve problems by breaking them into parts. Find the broken piece, fix it, move on. This works well for simple problems - a flat tire, a syntax error, a burned-out light bulb. But many of the problems we face aren't simple. They involve multiple parts that influence each other, where fixing one thing changes the behavior of something else.

Systems thinking is a way of looking at problems that focuses on the connections between things rather than the things themselves. Instead of asking "what's broken?" it asks "how do these parts interact, and what happens when one of them changes?"

As Peter Senge puts it in "The Fifth Discipline": "Systems thinking is a discipline for seeing wholes. It is a framework for seeing interrelationships rather than things, for seeing 'patterns of change' rather than static 'snapshots.'"

Here's a simple example. Say a city has a traffic congestion problem. The obvious fix is to build more roads. But what actually happens? More roads make driving easier, which encourages more people to drive instead of taking public transit, which eventually fills up the new roads too. The "solution" ends up feeding the very problem it was meant to fix. A systems thinker would step back and ask: what's driving congestion in the first place? Is it a road problem, a housing problem, a public transit problem, or all three interacting with each other?

That shift - from fixing symptoms to understanding the underlying structure that produces them - is the core of systems thinking.

The Building Blocks

Meadows breaks systems down into a few fundamental concepts. Once you understand these, you start seeing them everywhere - in software, in organizations, in ecosystems, in your own habits.

Stocks and Flows

A stock is anything that accumulates - water in a reservoir, money in a bank account, bugs in a backlog, trust in a relationship. A flow is what adds to or drains from that stock. The faucet fills the bathtub; the drain empties it. The water level at any moment depends on the balance between the two.

This sounds obvious, but it's surprisingly useful. Many problems that seem sudden actually built up gradually through an imbalance between inflows and outflows that nobody was watching. A team doesn't burn out overnight - it happens when the flow of work coming in consistently exceeds the team's capacity to process it, and nobody adjusts either side. By the time someone notices, the stock of exhaustion has been accumulating for months.
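To make the stock-and-flow idea concrete, here's a minimal Python sketch that treats a backlog as a stock. The function name `simulate_stock` and the numbers (12 tickets arriving per week, 10 closed) are hypothetical; the only point is that a small, steady imbalance between inflow and outflow quietly accumulates into a large stock.

```python
def simulate_stock(initial, inflow, outflow, steps):
    """Return the stock level after each step of a simple stock-and-flow model.

    Each step, the stock changes by (inflow - outflow), and it never drops
    below zero: a team can't close more tickets than exist.
    """
    stock = initial
    levels = []
    for _ in range(steps):
        stock = max(0, stock + inflow - outflow)
        levels.append(stock)
    return levels


# Hypothetical team: 12 tickets arrive each week, the team closes 10.
backlog = simulate_stock(initial=0, inflow=12, outflow=10, steps=26)
print(backlog[:4])  # [2, 4, 6, 8]
print(backlog[-1])  # 52
```

A gap of two tickets a week is easy to miss in any single week, yet after six months the backlog holds 52 tickets. Nothing sudden happened; the stock simply added up the imbalance.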

Feedback Loops

Feedback loops are where systems thinking gets really interesting. There are two kinds, and understanding the difference between them explains a lot about why systems behave the way they do.

Reinforcing loops amplify change. They push a system in one direction, faster and faster. Think of compound interest: money earns interest, the interest is added to the balance, and the larger balance earns even more interest. Or think of a viral social media post: more views lead to more shares, which lead to more views. Reinforcing loops can drive explosive growth - but they can also drive explosive collapse. A small crack in customer trust, left unaddressed, can spiral into a full reputation crisis as negative word-of-mouth feeds on itself.
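Here's a small Python sketch of a reinforcing loop, using compound interest as the example. The function name and the figures ($1,000 at 5% a year for 30 years) are hypothetical; what matters is that the inflow each step is proportional to the stock itself.

```python
def reinforcing_loop(initial, rate, steps):
    """Grow a stock whose inflow is proportional to the stock itself."""
    stock = initial
    history = [stock]
    for _ in range(steps):
        inflow = rate * stock  # the loop: a bigger stock produces a bigger inflow
        stock += inflow
        history.append(stock)
    return history


# Hypothetical: $1,000 at 5% interest, compounded yearly for 30 years.
balances = reinforcing_loop(1000.0, 0.05, 30)
yearly_gain = [later - earlier for earlier, later in zip(balances, balances[1:])]
print(round(yearly_gain[0], 2))   # 50.0
print(round(yearly_gain[-1], 2))  # 205.81
print(round(balances[-1], 2))     # 4321.94
```

The first year adds $50 and the thirtieth adds about $206, with no change in the rate. The acceleration comes entirely from the loop structure, and the same shape describes shares of a viral post or spreading distrust.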

Balancing loops resist change. They push a system back toward equilibrium, like a thermostat. When the room gets too cold, the heater turns on. When it gets warm enough, the heater turns off. Your body's temperature regulation works the same way. In organizations, budgets act as balancing loops - when spending exceeds the target, pressure builds to cut costs, pulling spending back toward the budget.
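A balancing loop can be sketched the same way. This is a minimal, hypothetical thermostat model in Python: an on/off heater adds a fixed amount of heat per step, and the room leaks heat toward the outside temperature. The parameters `heater_power` and `leak` are made-up values chosen only to make the behavior visible.

```python
def thermostat(start_temp, target, outside, steps, heater_power=2.0, leak=0.1):
    """Simulate a room with an on/off heater acting as a balancing loop.

    heater_power: degrees added per step while the heater is on (assumed).
    leak: fraction of the indoor/outdoor gap lost per step (assumed).
    """
    temp = start_temp
    history = []
    for _ in range(steps):
        heater_on = temp < target           # the loop: the gap decides the action
        heat_in = heater_power if heater_on else 0.0
        heat_out = leak * (temp - outside)  # heat leaks toward the outside temperature
        temp += heat_in - heat_out
        history.append(temp)
    return history


temps = thermostat(start_temp=10.0, target=20.0, outside=5.0, steps=50)
settled = temps[15:]
print(round(min(settled), 1), round(max(settled), 1))
```

After a warm-up of about ten steps, the temperature stays in a narrow band between roughly 18.5 and 20.5 degrees. Without the `temp < target` check the heater would stay on and the room would drift toward 25 degrees (`outside + heater_power / leak`); the feedback from the gap is what holds it near the target.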

Most systems contain both types of loops interacting with each other. Understanding which loops are dominant at any given moment tells you a lot about where the system is heading.
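A common way to watch two loops trade dominance is logistic growth: a reinforcing loop (growth proportional to the current stock) coupled with a balancing loop (growth slowing as the stock nears a limit). This is a hypothetical discrete-time sketch with made-up parameters; `capacity` stands in for whatever limit the system runs into, such as market size or available resources.

```python
def logistic_growth(initial, rate, capacity, steps):
    """Stock driven by a reinforcing loop and limited by a balancing loop.

    Keeping rate <= 1 stops this discrete version from overshooting capacity.
    """
    stock = initial
    history = [stock]
    for _ in range(steps):
        reinforcing = rate * stock          # more stock -> more growth
        balancing = 1 - stock / capacity    # growth shrinks as the limit nears
        stock += reinforcing * balancing
        history.append(stock)
    return history


users = logistic_growth(initial=10.0, rate=0.5, capacity=1000.0, steps=40)
growth = [b - a for a, b in zip(users, users[1:])]
# The step with the largest growth is where the balancing loop starts to win.
peak = max(range(len(growth)), key=growth.__getitem__)
print(peak, round(users[peak]))
```

Growth per step rises while the reinforcing loop dominates, peaks when the stock is near half of `capacity`, and then falls as the balancing loop takes over. Judging only from the early steps, you would predict exponential growth forever.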

Delays

One of the most underappreciated aspects of systems is that cause and effect are often separated by time. You make a change today, but the consequences don't show up for weeks or months. This delay is what makes systems so hard to manage intuitively.

Think about hiring. A team is overwhelmed, so you hire three new people. But it takes months for them to ramp up - and in the meantime, existing team members are spending time onboarding them instead of doing their own work. For a while, adding people actually makes things slower. If you don't understand the delay, you might panic and hire even more people, making the problem worse. This is exactly the kind of trap that systems thinking helps you anticipate.
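The hiring delay can be sketched numerically. This Python model is hypothetical: it assumes each new hire's productivity ramps linearly from zero to full over `ramp_months`, and that each hire still ramping up pulls `onboarding_cost` of an existing engineer's time away from regular work. Both the linear ramp and the parameter values are illustrative assumptions, not measurements.

```python
def team_output(existing, new_hires, months, ramp_months=4, onboarding_cost=0.5):
    """Monthly output, in full-time-engineer units, after adding new hires.

    Assumptions (hypothetical): each new hire's productivity ramps linearly
    from 0 to 1 over ramp_months, and while a hire is still ramping, they pull
    onboarding_cost of an existing engineer's time away from regular work.
    """
    output = []
    for month in range(months):
        ramp = min(1.0, month / ramp_months)  # fraction of full productivity reached
        from_hires = new_hires * ramp
        onboarding_drag = new_hires * onboarding_cost * (1 - ramp)
        output.append(existing + from_hires - onboarding_drag)
    return output


print(team_output(existing=5, new_hires=0, months=6))  # [5.0, 5.0, 5.0, 5.0, 5.0, 5.0]
print(team_output(existing=5, new_hires=3, months=6))  # [3.5, 4.625, 5.75, 6.875, 8.0, 8.0]
```

Under these assumptions the team produces less than its pre-hiring baseline for the first two months. A manager reading the month-one numbers without accounting for the delay would conclude the hires made things worse, which is exactly the moment the urge to hire even more kicks in.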

Leverage Points

Not all interventions are equal. In any system, there are places where a small change can produce a large effect - Meadows called these leverage points. The challenge is that they're often not where you'd expect.

In software, a leverage point might not be fixing the slowest function - it might be changing the architecture so that function doesn't need to run at all. In an organization, the highest leverage point is often not the process or the tooling but the mental models and goals that shape how people make decisions. Change someone's understanding of what success looks like, and their behavior changes across everything they do - without needing a new policy for each individual action.

As Meadows writes: "A system is more than the sum of its parts. It may exhibit adaptive, dynamic, goal-seeking, self-preserving, and sometimes evolutionary behavior."

Systems Traps

Meadows identified several common patterns where well-intentioned actions backfire because people didn't think about the system as a whole. She called these "systems traps," and once you learn to recognize them, they're hard to unsee.

Policy Resistance

This happens when a solution triggers reactions from other parts of the system that cancel out the intended effect. A classic example: a government imposes strict fishing regulations to protect declining fish populations. The intent is good. But fishermen whose livelihoods depend on the catch respond by fishing illegally or moving to unregulated areas. The regulation ends up provoking the very overfishing it was meant to prevent.

The systems thinking approach is to involve all the stakeholders in designing the solution. Instead of imposing a regulation from the top, find ways to align the fishermen's economic interests with conservation goals - through subsidies for sustainable practices, alternative income programs, or community-managed quotas. When people are part of the solution, they stop working against it.

Tragedy of the Commons

When a shared resource has no limits on usage, everyone acts in their own short-term interest, and the resource gets depleted. Overfishing in open waters, overgrazing on shared land, burning fossil fuels in a shared atmosphere - the pattern is always the same. Each individual's action is rational in isolation, but collectively, they destroy the resource everyone depends on.

The fix requires creating feedback that connects individual actions to collective consequences. Quotas, usage fees, transparent monitoring - anything that makes the cost of overuse visible and immediate rather than hidden and delayed.
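The commons trap and the quota fix can be compared in a short Python sketch. Everything here is hypothetical: the fish stock regrows logistically toward `capacity`, each fisher tries to land `catch_each` per season regardless of what others do, and a quota is modeled simply as a hard cap on the total catch. Real fisheries management is far more involved; this only shows the structure.

```python
def shared_fishery(stock, fishers, catch_each, seasons, capacity=1000.0,
                   regrowth=0.5, quota=None):
    """Simulate a shared fish stock harvested by independent fishers.

    The stock regrows logistically; each fisher tries to land catch_each per
    season. An optional quota caps the total catch (all values hypothetical).
    """
    history = []
    for _ in range(seasons):
        stock += regrowth * stock * (1 - stock / capacity)  # natural regrowth
        demand = fishers * catch_each                        # everyone acts alone
        allowed = demand if quota is None else min(demand, quota)
        stock = max(0.0, stock - allowed)  # the catch can't exceed the fish that exist
        history.append(stock)
    return history


open_access = shared_fishery(1000.0, fishers=10, catch_each=20, seasons=50)
with_quota = shared_fishery(1000.0, fishers=10, catch_each=20, seasons=50, quota=100)
print(round(open_access[-1], 1), round(with_quota[-1], 1))  # 0.0 723.6
```

Ten fishers each wanting 20 fish a season ask for 200 in total, but in this model the stock can regrow at most 125 per season (`regrowth * capacity / 4`), so open access drives it to zero. Capping the total catch at 100 lets the stock settle around 724, a level the fishers can keep harvesting indefinitely.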

Fixes That Fail

This is the trap I see most often in engineering. You apply a quick fix that addresses the symptom but not the root cause. The symptom goes away temporarily, so the underlying problem gets ignored. Then the symptom comes back, often worse than before, and you apply another quick fix. The cycle repeats.

Air conditioning is a good macro example. It solves the immediate problem of heat, but the energy it consumes contributes to the warming that made air conditioning necessary in the first place. In software, this looks like adding caching layers to mask a slow database query instead of fixing the query itself. Each new cache adds complexity, and eventually you're debugging the caching system instead of solving the original performance problem.

The systems thinking response is to ask: "What is this fix actually doing to the underlying structure?" If it's making the root cause easier to ignore, it's not a fix - it's a delay.

As Russell Ackoff argued, a system is not the sum of the behavior of its parts; it's the product of their interactions.

Seeing Systems in the Real World

Climate change is probably the clearest example of why systems thinking matters. It's not one problem - it's a web of interconnected systems (environmental, economic, social, political) all influencing each other in ways that make simple solutions impossible.

Consider the feedback loops at work. Melting polar ice is a reinforcing loop: ice reflects sunlight, but as it melts, the darker ocean absorbs more heat, which melts more ice, which exposes more ocean. Meanwhile, increased CO2 can stimulate plant growth, creating a balancing loop where more vegetation absorbs more carbon. These loops are running simultaneously, and the question is which ones dominate.

The delays are enormous. Carbon dioxide emitted today will keep affecting temperatures for decades, and some of it for centuries. Policies implemented now won't show measurable results for years. This makes it incredibly hard to build political will, because the feedback between action and result is too slow for most decision-making cycles.

And the leverage points aren't where most people look. Adding solar panels is useful, but it's a relatively low-leverage intervention. Higher-leverage points include changing the economic incentive structures (carbon pricing), shifting cultural norms around consumption, and redesigning urban infrastructure so that low-carbon lifestyles become the default rather than a conscious choice. These are harder to implement, but they change the system itself rather than just adding patches on top.

This is what systems thinking does well: it helps you see that climate change isn't a technical problem with a technical solution. It's a systems problem that requires understanding how technology, economics, behavior, and policy interact - and finding interventions that work with those interactions rather than against them.

Key Takeaway

Coming back to where I started - as an engineer, the most valuable thing systems thinking gave me wasn't a specific tool or technique. It was a habit of asking different questions. Instead of "what's broken?" I now ask "what structure is producing this behavior?" Instead of "how do I fix this symptom?" I ask "what feedback loops are keeping this problem in place?"

These questions don't always lead to faster solutions. Sometimes they slow you down, because understanding a system takes more effort than just patching the most visible symptom. But the solutions you arrive at tend to actually stick - because you're addressing the structure that produces problems, not just the problems themselves.

If this way of thinking resonates with you, I'd recommend starting with Meadows' book. It's short, clearly written, and full of examples that make the concepts concrete. And here's a lecture by Meadows herself on system dynamics that captures her thinking beautifully.